Remaking the game Starship Shooter with ML5 PoseNet

Summary

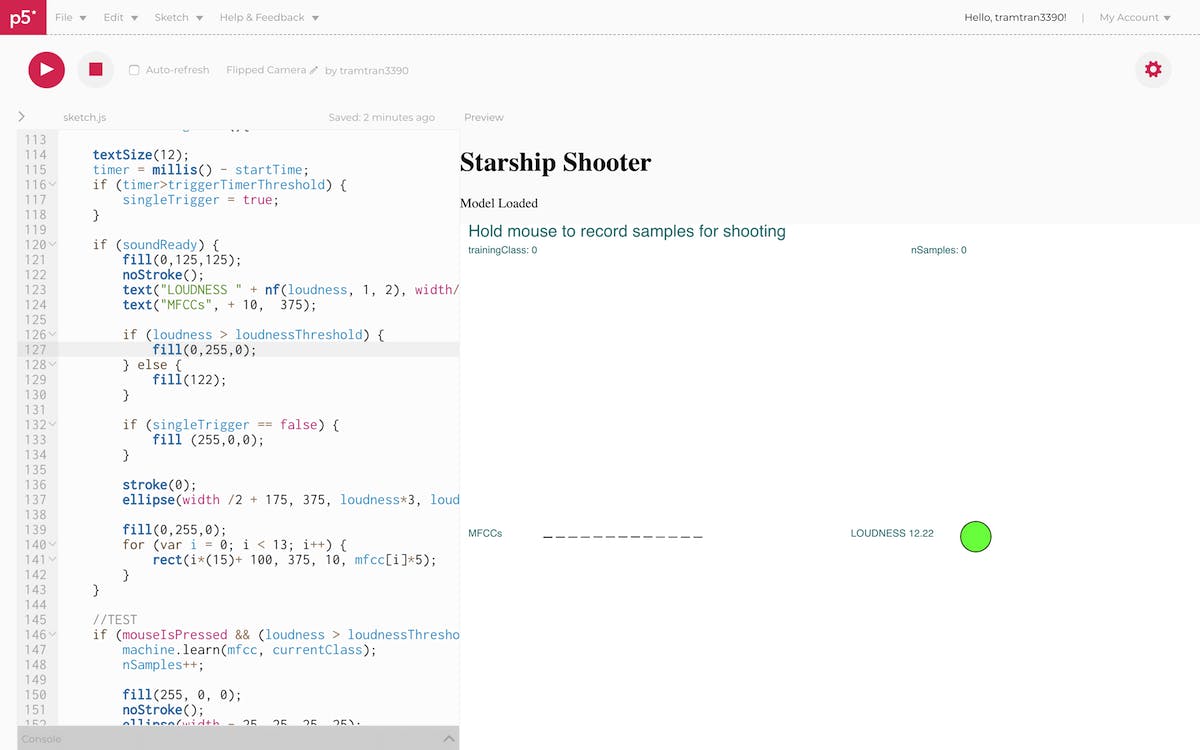

In December 2018, I spent a week in Kochi, India, attending Machine Learning for Interaction Design, one of the workshops offered by the Copenhagen Institute of Interaction Design (CIID). Together with two classmates, Robin Thomas and Gayathrikasi, I created a simple version of the Starship Shooter game with p5.js. Players steer the starship by moving left and right and shoot obstacles with a voice command.

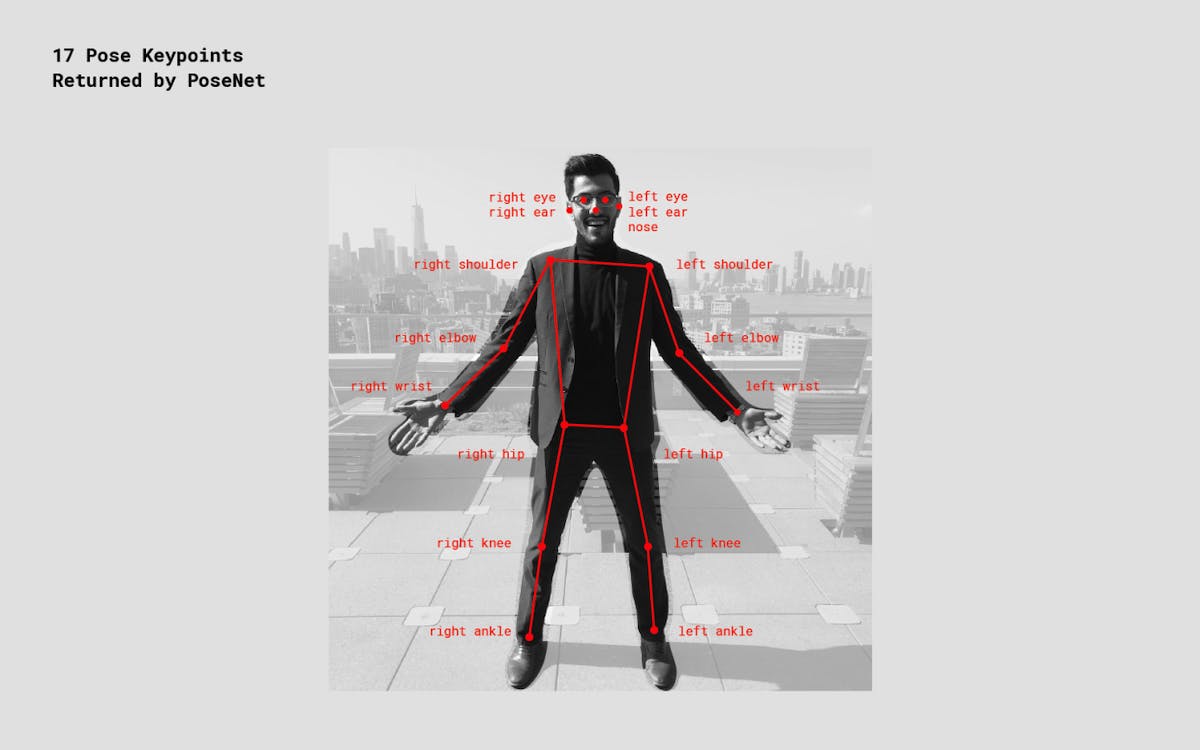

PoseNet, as provided by the ml5.js library, is a machine learning model for real-time human pose estimation. It can detect up to 17 keypoints (a keypoint is one body part of a person's pose, such as the nose, an eye, or a wrist, as shown in the image above). In this project, the spaceship was drawn at the x-y position of the player's nose.
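A minimal sketch of the nose-tracking step follows, written in Python for readability rather than as the project's p5.js code. It assumes each keypoint carries a part name, an x/y position, and a confidence score, which is the kind of data PoseNet reports per body part. The `Keypoint` class, the `MIN_SCORE` threshold, and the `mirror` flag are hypothetical illustrations, not part of ml5.js.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical keypoint record: a body-part name, a pixel position, and the
# model's confidence score for that part.
@dataclass
class Keypoint:
    part: str
    x: float
    y: float
    score: float

# Assumed confidence threshold; would need tuning for camera and lighting.
MIN_SCORE = 0.5


def ship_position(
    keypoints: List[Keypoint],
    previous: Tuple[float, float],
    canvas_width: float,
    mirror: bool = False,
) -> Tuple[float, float]:
    """Return where the ship should be drawn for this frame.

    Uses the nose keypoint when it is confident enough; otherwise keeps the
    previous position so the ship does not jump on a poor detection.
    """
    nose: Optional[Keypoint] = next((k for k in keypoints if k.part == "nose"), None)
    if nose is None or nose.score < MIN_SCORE:
        return previous
    # Assumption: if the webcam image is displayed mirrored, flip x so the
    # ship moves in the same direction the player moves.
    x = canvas_width - nose.x if mirror else nose.x
    return (x, nose.y)


if __name__ == "__main__":
    pose = [Keypoint("leftEye", 110.0, 230.0, 0.9), Keypoint("nose", 100.0, 240.0, 0.95)]
    print(ship_position(pose, previous=(0.0, 0.0), canvas_width=640))
    print(ship_position(pose, previous=(0.0, 0.0), canvas_width=640, mirror=True))
```

Falling back to the previous position is one simple way to keep the ship steady when the nose is briefly lost; smoothing the position over several frames would be another option.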

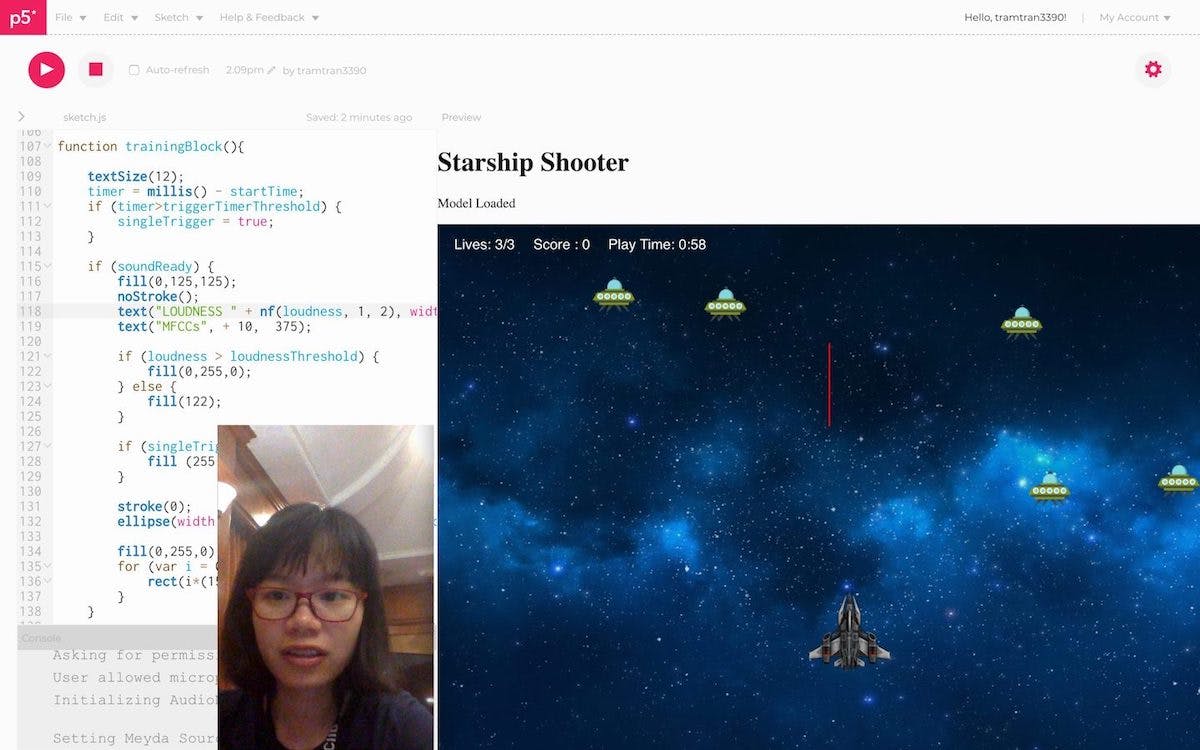

A classifier was trained on Mel-Frequency Cepstral Coefficients (MFCCs) to distinguish two kinds of sound: environmental noise was labeled "0" and the shooting command was labeled "1". The classification was not very accurate, so the game could not include loud sound effects or background music, which might have been mistaken for a command to fire.
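The post does not say which type of classifier was used, so the following is only a hedged sketch of the two-label idea. It assumes MFCC vectors have already been extracted from the microphone input and uses a nearest-centroid classifier, a deliberately simple stand-in technique. The `margin` parameter is a hypothetical safety gap showing one way to reduce accidental shots from loud background sounds.

```python
import math
from typing import Dict, List, Sequence

# Labels used in the game: 0 = environmental noise, 1 = shooting command.
NOISE, SHOOT = 0, 1


def train_centroids(
    samples: Sequence[Sequence[float]], labels: Sequence[int]
) -> Dict[int, List[float]]:
    """Average the MFCC vectors of each label into one centroid per class."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for vec, label in zip(samples, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vec)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two MFCC vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def should_fire(
    mfcc: Sequence[float], centroids: Dict[int, List[float]], margin: float = 1.0
) -> bool:
    """Fire only if the frame is clearly closer to the command than to noise.

    A larger (hypothetical) margin means fewer false shots from loud sounds,
    at the cost of missing some genuine commands.
    """
    d_noise = distance(mfcc, centroids[NOISE])
    d_shoot = distance(mfcc, centroids[SHOOT])
    return d_shoot + margin < d_noise


if __name__ == "__main__":
    # Toy 2-D vectors stand in for real MFCC frames (typically 13 or more values).
    train_x = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
    train_y = [NOISE, NOISE, SHOOT, SHOOT]
    c = train_centroids(train_x, train_y)
    print(should_fire([10.0, 10.0], c), should_fire([0.0, 0.0], c))
```

Raising `margin` trades sensitivity for robustness, which reflects the limitation described above: when the two classes are hard to separate, any loud sound close to the command can still trigger a shot.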